Objective

This article aims to provide an understanding of Kubernetes, an open-source project for container orchestration. By the end of this article, we will have looked at the following:

What Kubernetes is, its history, and how it works

The components that make up the Kubernetes architecture and how they interact with each other

The key features of Kubernetes and their significance in container orchestration

How Kubernetes is used in modern software engineering practices, along with its benefits and limitations

The factors that influence the decision to use Kubernetes, and when it may not be the best choice

Ways to contribute to the Kubernetes community, as a developer and as a community member

Introduction

Kubernetes is an open-source container orchestration project originally created at Google. It draws on Google's experience managing the enormous number of containers it deployed internally. Kubernetes automates the deployment, scaling, and management of containerized applications, which makes them easier to run at scale.

Containerization packages an application together with its dependencies so that it can be deployed and run in any environment. Container orchestration is the automation of deploying, scaling, and maintaining containerized applications. Kubernetes is a popular container orchestration technology because it simplifies managing containers, particularly at scale.

Since its initial release in 2014, Kubernetes has become one of the most popular container orchestration platforms. The project is now maintained under the Cloud Native Computing Foundation (CNCF), an open-source software foundation that is part of the Linux Foundation.

When deploying applications, Kubernetes offers the following benefits:

Scalability: Kubernetes makes it simple to scale applications up or down as needed, letting you adapt quickly to changes in traffic and demand.

Availability: Kubernetes helps keep your applications available by automatically detecting and replacing failed containers or nodes.

Portability: Kubernetes makes it simple to deploy and run applications in any environment, including on-premises and cloud environments.

Automation: Kubernetes automates many of the tasks involved in deploying and operating containerized applications, which makes them easier to run at scale.

To summarize the introduction, Kubernetes is a key tool in contemporary software engineering because it streamlines the deployment and management of containers at scale.

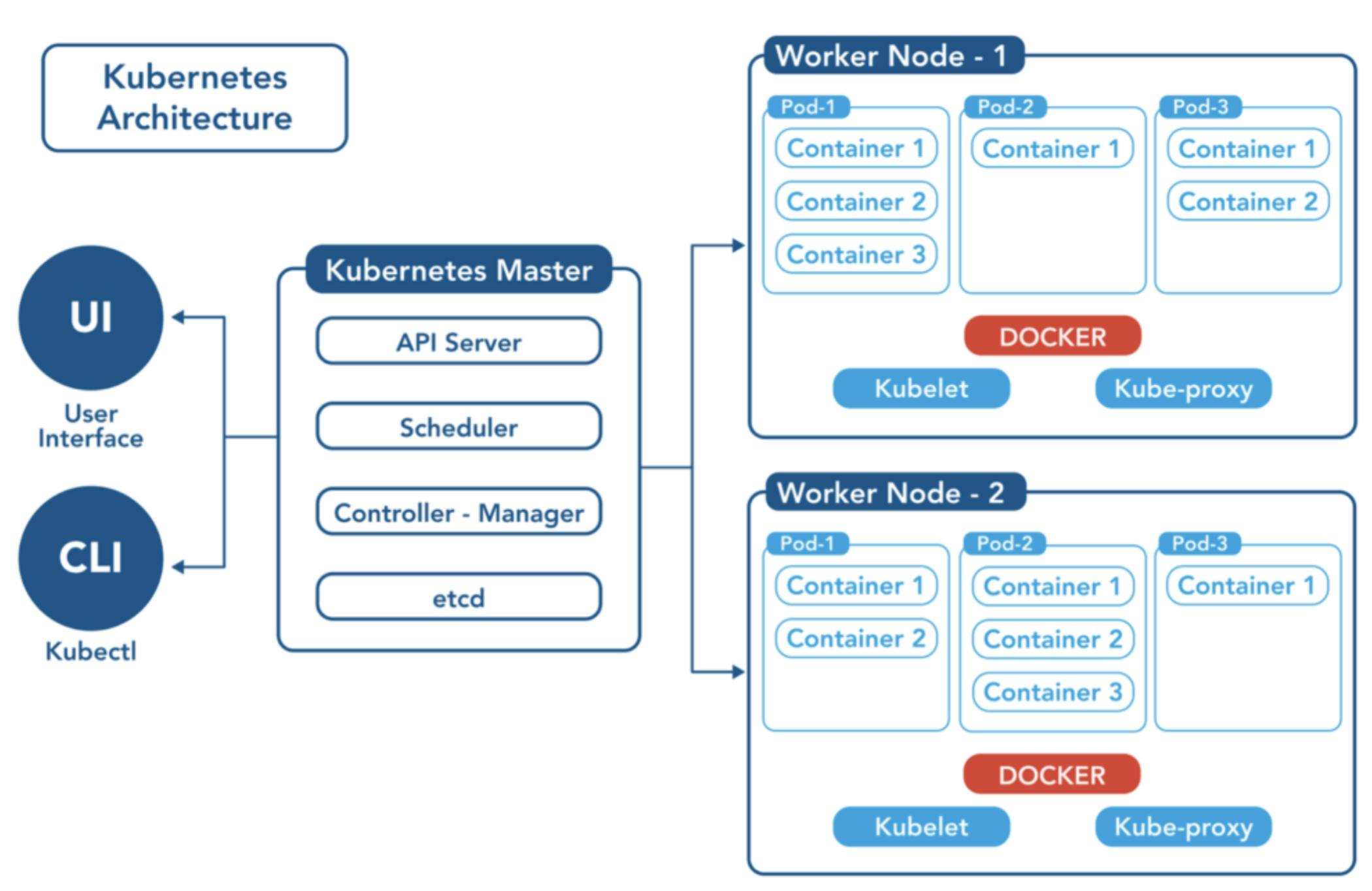

Kubernetes Architecture

The Kubernetes architecture consists of several components that work together to deliver container orchestration services. These components fall into two categories: the control plane (also referred to as the master node) and the worker nodes.

Kubernetes Control Plane:

The control plane manages and maintains the overall state of the cluster. It is made up of several components:

API Server: This component exposes the Kubernetes API and is the entry point through which users, worker nodes, and the other control plane components communicate. It processes requests to create, update, or delete Kubernetes objects (such as pods, services, and replication controllers) and validates those requests before changing the cluster's state.

etcd: This distributed key-value store holds the cluster's configuration and state data. This includes the desired state of the cluster, such as which pods should be running on which nodes.

Controller Manager: This component runs the controllers responsible for keeping the cluster in its desired state. Each controller watches a specific kind of Kubernetes object (such as a replication controller) and takes action whenever the cluster's actual state differs from the desired state.

Scheduler: The scheduler assigns pods, which are groups of one or more containers, to worker nodes. To decide where to place each pod, it takes into account factors such as resource availability and node affinity.
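The scheduler's placement decision can be pictured as a filter step followed by a scoring step. The Python sketch below is a simplified illustration, not the actual scheduler code: the `Node` and `PodRequest` classes, the `pick_node` function, and the scoring rule (prefer the node with the most CPU left over) are all assumptions made for this example, and node affinity is left out.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical view of a worker node's unallocated resources."""
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    """Hypothetical resource request for a pod awaiting placement."""
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: PodRequest, nodes: List[Node]) -> Optional[Node]:
    # Filter: keep only nodes with enough free CPU and memory for the pod.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # No node fits, so the pod cannot be placed yet.
    # Score (assumed rule): prefer the node with the most CPU left after
    # placement, breaking ties by remaining memory.
    return max(
        feasible,
        key=lambda n: (
            n.free_cpu_millicores - pod.cpu_millicores,
            n.free_memory_mib - pod.memory_mib,
        ),
    )


nodes = [
    Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millicores=4000, free_memory_mib=512),
]
chosen = pick_node(PodRequest("web", cpu_millicores=1000, memory_mib=1024), nodes)
print(chosen.name if chosen else "unschedulable")
```

Here only `node-b` has both enough CPU and enough memory, so it is the node selected.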

Worker Nodes:

Worker nodes are the machines that run containers under the supervision of the control plane. Each worker node runs several components:

Kubelet: This agent runs on every worker node and manages the containers on that node. It communicates with the API server to learn which containers to run and when to start or stop them.

kube-proxy: This component, which runs on each worker node, manages network communication between pods and services. It directs traffic to the appropriate pod or service based on the destination IP address and port.

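Container Runtime: This software runs the containers on each node. Kubernetes supports several container runtimes through the Container Runtime Interface (CRI), including containerd and CRI-O. Since the built-in Docker integration (dockershim) was removed in Kubernetes 1.24, Docker Engine requires the cri-dockerd adapter.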

Kubernetes Master Node and Worker Nodes:

The control plane (master node) and the worker nodes manage containers together. Requests arrive through the Kubernetes API, and the control plane components then schedule the requested containers onto worker nodes. On each worker node, the kubelet and the container runtime start the containers and manage them from then on.

To summarize the architecture discussion, the Kubernetes architecture is designed to deliver fault-tolerant, highly scalable container orchestration. Understanding its components and how they interact makes it easier to see how Kubernetes delivers these services.

Key Features of Kubernetes

Kubernetes offers a number of features that make it an effective tool for container orchestration, including:

Networking:

Kubernetes offers many networking options that enable communication between containers and with external networks. Its network plugins implement a range of approaches, including network address translation (NAT), software-defined networking (SDN), and overlay networks. A built-in service discovery mechanism also enables containers to find other containers and services in the cluster.

Thanks to Kubernetes' flat network model, every pod in the cluster can communicate with every other pod without NAT or port forwarding. This simplified networking model makes containerized applications easier to deploy and manage.
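To make service discovery more concrete, the Python sketch below builds a Service manifest as a plain dictionary and derives the DNS name under which other pods would typically reach it. The names `web` and `shop`, the ports, and the helper functions are placeholders invented for this example, and the `cluster.local` domain is the common default rather than a guarantee for every cluster.

```python
import json
from typing import Dict


def service_manifest(name: str, namespace: str, selector: Dict[str, str],
                     port: int, target_port: int) -> dict:
    """Build a Service object that routes `port` to pods matching `selector`."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "selector": selector,
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def service_dns_name(name: str, namespace: str,
                     cluster_domain: str = "cluster.local") -> str:
    """Return the in-cluster DNS name for a Service (assumes default DNS setup)."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


manifest = service_manifest("web", "shop", {"app": "web"}, port=80, target_port=8080)
print(json.dumps(manifest, indent=2))
print(service_dns_name("web", "shop"))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, since kubectl accepts JSON as well as YAML.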

Storage Management:

Kubernetes provides several ways to manage storage in a container-based system. Its storage plugins support backends such as network-attached storage (NAS), storage area networks (SAN), and cloud-based storage. A built-in volume abstraction gives containers access to these storage resources.

Because Kubernetes volumes are defined independently of the containers that use them, data can survive even if a container is destroyed. Kubernetes offers a number of volume types with different lifetimes. An emptyDir volume, for example, is created when a pod is created and removed when the pod is deleted, so its data survives container restarts but not the pod itself. Persistent volumes, by contrast, can outlive the pods that use them.
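As an illustration of the volume abstraction, the Python sketch below describes a pod with one emptyDir volume mounted into its container. The pod name, image tag, and mount path are placeholders chosen for this example, and `undeclared_mounts` is a hypothetical helper, not part of any Kubernetes tooling.

```python
import json
from typing import List

# A pod whose single container mounts a scratch emptyDir volume at /cache.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "cache-demo"},
    "spec": {
        "containers": [
            {
                "name": "app",
                "image": "nginx:1.25",
                "volumeMounts": [{"name": "scratch", "mountPath": "/cache"}],
            }
        ],
        # The volume is declared at pod level, independently of the container.
        "volumes": [{"name": "scratch", "emptyDir": {}}],
    },
}


def undeclared_mounts(pod_manifest: dict) -> List[str]:
    """Return mount names that do not refer to a volume declared by the pod."""
    declared = {v["name"] for v in pod_manifest["spec"].get("volumes", [])}
    return [
        mount["name"]
        for container in pod_manifest["spec"]["containers"]
        for mount in container.get("volumeMounts", [])
        if mount["name"] not in declared
    ]


print(json.dumps(pod, indent=2))
print("undeclared mounts:", undeclared_mounts(pod))
```

Declaring the volume under `spec.volumes` and referencing it by name from the container mirrors the separation described above: the volume belongs to the pod, not to an individual container.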

Security:

Kubernetes offers a number of security features to safeguard containers and the cluster. It supports several authentication and authorization methods, such as token-based authentication and role-based access control (RBAC). In addition, Kubernetes offers security contexts that let containers run with different levels of privilege, as well as tools that help administrators secure the cluster, including network policies and pod security policies.

Administrators can define security contexts for containers, including user IDs, group IDs, and Linux capabilities. Network policies let administrators specify the network traffic that is permitted to and from a pod, while pod security policies let administrators define the security conditions a pod must meet before it is allowed to run. Pod security policies were removed in Kubernetes 1.25 in favor of Pod Security Admission.
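The two mechanisms described above can be sketched as plain data. The Python example below shows a container-level security context and a network policy that only admits traffic from one set of pods. All names, labels, IDs, and the port number are placeholders assumed for this illustration.

```python
import json

# Container-level security context: run as a non-root user and group,
# forbid privilege escalation, and drop all Linux capabilities.
security_context = {
    "runAsNonRoot": True,
    "runAsUser": 1000,
    "runAsGroup": 3000,
    "allowPrivilegeEscalation": False,
    "capabilities": {"drop": ["ALL"]},
}

# Network policy: pods labeled app=db accept ingress traffic only from
# pods labeled app=api, and only on TCP port 5432.
network_policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "db-allow-api", "namespace": "shop"},
    "spec": {
        "podSelector": {"matchLabels": {"app": "db"}},
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                "from": [{"podSelector": {"matchLabels": {"app": "api"}}}],
                "ports": [{"protocol": "TCP", "port": 5432}],
            }
        ],
    },
}

print(json.dumps({"securityContext": security_context}, indent=2))
print(json.dumps(network_policy, indent=2))
```

Note that a network policy only takes effect when the cluster's network plugin enforces it.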

Automation and Scaling:

Kubernetes offers a number of capabilities for automating and scaling containerized applications. It provides controllers, such as the Deployment and ReplicaSet controllers, that deploy, scale, and update containers automatically. It also offers further scaling mechanisms, such as horizontal pod autoscaling, which adjusts the number of pods based on resource usage.
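The core calculation behind horizontal pod autoscaling is a ratio between the observed metric and its target, as described in the Kubernetes documentation. The Python sketch below applies that ratio and clamps the result to a replica range. The function name, parameter names, and default bounds are assumptions for this example, and refinements such as tolerances and stabilization windows are deliberately left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale the replica count by the ratio of observed to target metric."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # ceil(currentReplicas * currentMetricValue / desiredMetricValue)
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# 4 pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))
```

With 4 pods at 90% average utilization and a 60% target, the ratio is 1.5, so the sketch asks for 6 pods.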

With Kubernetes controllers, administrators specify the desired state of a containerized application, and Kubernetes automatically works to bring the application's actual state in line with it. This makes it practical to deploy and manage containerized applications at scale.
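The desired-state model can be pictured as a reconciliation step that compares what should exist with what does exist and computes the difference. The Python sketch below is a toy version of that idea: the `reconcile` function and its return shape are invented for this example and do not reflect how the real controllers are implemented.

```python
from typing import Dict, List


def reconcile(desired_replicas: int, running_pods: List[str]) -> Dict[str, object]:
    """Compute the actions needed to move the actual state to the desired state."""
    if desired_replicas < 0:
        raise ValueError("desired_replicas must not be negative")
    missing = desired_replicas - len(running_pods)
    if missing > 0:
        # Too few pods: ask for the shortfall to be created.
        return {"create": missing, "delete": []}
    # Too many pods (or exactly enough): remove the surplus, if any.
    return {"create": 0, "delete": running_pods[desired_replicas:]}


print(reconcile(3, ["web-1"]))
print(reconcile(1, ["web-1", "web-2", "web-3"]))
print(reconcile(2, ["web-1", "web-2"]))
```

A real controller runs this kind of comparison continuously, so a pod that crashes simply shows up as a shortfall on the next pass and is replaced.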

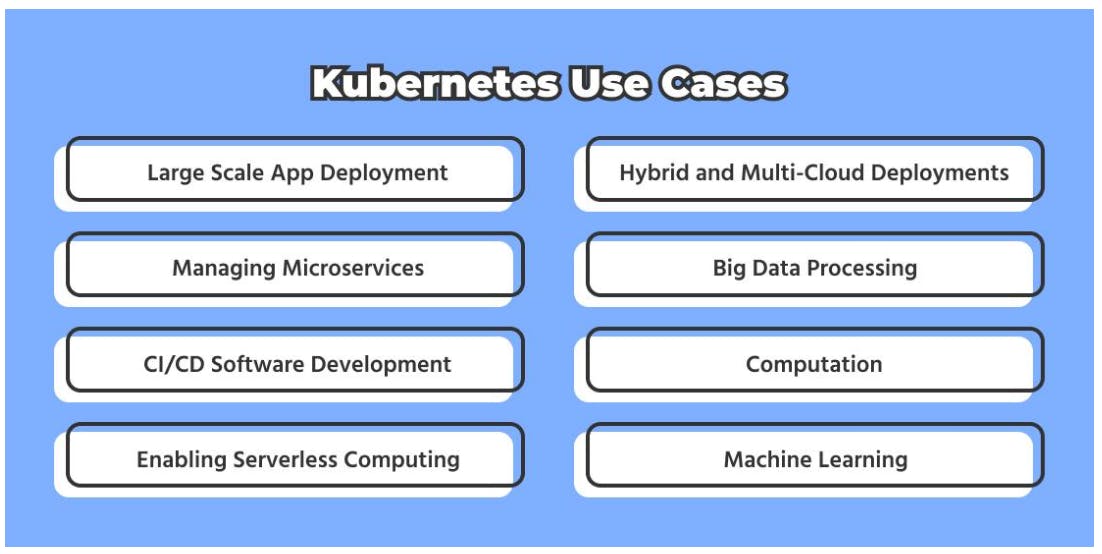

Use of Kubernetes in Modern Software Engineering Practices

Kubernetes has emerged as a crucial tool in modern software engineering practices, particularly in the DevOps pipeline.

The following are some examples of how Kubernetes is used in modern software engineering practices:

Application Development:

Kubernetes makes application deployment quick and straightforward: developers deploy an application, and Kubernetes works to keep it running as expected. Kubernetes also offers tools for handling application updates and rollbacks.
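One of the tools for handling updates is the Deployment object with a rolling update strategy. The Python sketch below builds such a manifest as a dictionary. The application name, image reference, replica count, and surge settings are placeholders assumed for this example, and `registry.example.com` is not a real registry.

```python
import json

labels = {"app": "web"}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        # The selector must match the labels on the pod template below.
        "selector": {"matchLabels": labels},
        # Replace pods gradually: at most one extra pod and at most one
        # unavailable pod at any time during an update.
        "strategy": {
            "type": "RollingUpdate",
            "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 1},
        },
        "template": {
            "metadata": {"labels": labels},
            "spec": {
                "containers": [
                    {"name": "web", "image": "registry.example.com/web:1.0.0"}
                ]
            },
        },
    },
}

print(json.dumps(deployment, indent=2))
```

Changing the `image` field and re-applying the manifest triggers a rolling update, and `kubectl rollout undo` can be used to return to the previous revision.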

Scaling:

Kubernetes scales applications horizontally by adding or removing containers. Developers can scale applications up or down as necessary to meet fluctuating demand. Kubernetes also offers ways to scale applications automatically based on resource usage.

Continuous Integration and Continuous Deployment (CI/CD):

Kubernetes can be incorporated into the CI/CD process to automate the deployment of containerized applications. With Kubernetes in the CI/CD pipeline, developers can deploy applications rapidly and effectively, and Kubernetes works to keep them running as planned.
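A deployment stage in a CI/CD pipeline often comes down to a few kubectl invocations. The Python sketch below only assembles those command lines and does not run them. The function names, the deployment and container names, the image reference, and the timeout are hypothetical choices for this example, and a real pipeline might use a different mechanism entirely.

```python
from typing import List


def rollout_commands(deployment: str, container: str, image: str,
                     namespace: str = "default",
                     timeout: str = "120s") -> List[List[str]]:
    """Commands to point a Deployment at a new image and wait for the rollout."""
    target = f"deployment/{deployment}"
    return [
        ["kubectl", "set", "image", target, f"{container}={image}",
         "--namespace", namespace],
        ["kubectl", "rollout", "status", target,
         "--namespace", namespace, f"--timeout={timeout}"],
    ]


def rollback_command(deployment: str, namespace: str = "default") -> List[str]:
    """Command to return a Deployment to its previous revision."""
    return ["kubectl", "rollout", "undo", f"deployment/{deployment}",
            "--namespace", namespace]


commands = rollout_commands("web", "web", "registry.example.com/web:abc123",
                            namespace="shop")
for argv in commands:
    print(" ".join(argv))
print(" ".join(rollback_command("web", namespace="shop")))
```

A pipeline step could pass each list to `subprocess.run(argv, check=True)` and issue the rollback command if the rollout status check fails.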

Service Discovery and Load Balancing:

Kubernetes provides a built-in service discovery mechanism that lets containers find other containers and services in the cluster. It also has built-in load-balancing features that distribute traffic across containers.
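The idea of spreading traffic across the containers behind a service can be illustrated with a simple round-robin picker. The Python sketch below is only a conceptual model: the endpoint addresses are made up, and the strategy Kubernetes actually uses depends on how the cluster is configured and is not necessarily round-robin.

```python
from collections import Counter
from itertools import cycle
from typing import Iterator, List


def round_robin(endpoints: List[str]) -> Iterator[str]:
    """Yield endpoints in rotation so that requests are spread evenly."""
    if not endpoints:
        raise ValueError("at least one endpoint is required")
    return cycle(endpoints)


# Hypothetical pod addresses behind a single service.
endpoints = ["10.0.0.11:8080", "10.0.0.12:8080", "10.0.0.13:8080"]
picker = round_robin(endpoints)

# Route nine requests and count how many each endpoint received.
assignments = [next(picker) for _ in range(9)]
counts = Counter(assignments)
print(counts)
```

Each of the three endpoints receives three of the nine requests.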

Benefits of using Kubernetes:

Simplifies application deployment and management

Provides automated scaling of applications

Enables continuous delivery of applications

Provides built-in mechanisms for service discovery and load balancing

Provides a scalable and fault-tolerant infrastructure for containerized applications

Limitations of Using Kubernetes:

Has a steep learning curve

Can be complex to set up and manage

Can be resource-intensive

Requires expertise in Kubernetes to manage effectively

To summarize this section, Kubernetes is a critical tool in modern software engineering practices, particularly in the DevOps pipeline. Seeing how it fits into that pipeline and how it streamlines application deployment clarifies the role it plays in modern software engineering.

When to and When Not to Use Kubernetes

While Kubernetes is a powerful tool for container orchestration, it may not be the best choice in every situation. Here are some factors to consider when deciding whether to use Kubernetes:

Application Size and Complexity:

Kubernetes is well-suited for managing large and complex applications that consist of many containers. If the application is small and simple, however, Kubernetes may be overkill.

Number of Containers:

Kubernetes is best suited for managing large numbers of containers. If there are only a few containers that need to be managed, a simpler container orchestration solution may be more appropriate.

Availability of Resources:

Kubernetes can be resource-intensive, both in terms of hardware and personnel. If the necessary hardware resources or personnel resources are not available, Kubernetes may not be the best choice.

Alternative Container Orchestration Solutions:

There are several alternative container orchestration solutions to Kubernetes, such as Docker Swarm, Mesos, and Nomad. Each solution has its own strengths and weaknesses, and the choice of solution will depend on the specific requirements of the application.

When to Use Kubernetes:

Large and complex applications

Applications with many containers that need to be managed

High availability requirements

Need for advanced automation and scaling capabilities

When Not to Use Kubernetes:

Small and simple applications

Applications with few containers that need to be managed

Limited hardware or personnel resources

Lack of need for advanced automation and scaling capabilities

To summarize this section, understanding the factors that influence the decision to use Kubernetes, along with the alternative container orchestration solutions available, lets us make an informed decision about whether to use Kubernetes in a given scenario.

Contributing to the Kubernetes Community

Because Kubernetes is an open-source project, anyone can contribute to it. We can contribute to the Kubernetes community in the following ways:

Understanding the Kubernetes Development Process:

The Kubernetes development process is structured around a set of GitHub repositories. Anyone can submit a pull request to one of these repositories to contribute code to the project. Before submitting a pull request, it's important to review the Kubernetes contributing guidelines and to make sure that the code follows the Kubernetes coding standards.

Submitting Code Contributions:

To submit a code contribution to Kubernetes, we will need to fork the appropriate repository on GitHub, make our changes, and then submit a pull request. The pull request will be reviewed by the Kubernetes community, and if the changes are accepted, they will be merged into the main codebase.

Getting Involved in the Kubernetes Community:

The Kubernetes community is vibrant and active, with many opportunities for developers and other community members to get involved. Here are some ways to do so:

Join the Kubernetes Slack workspace to ask questions and get involved in community discussions

Attend Kubernetes community events, such as Kubernetes Meetups or Kubernetes Contributor Summits

Join a Kubernetes special interest group (SIG) to get involved in specific areas of the project, such as networking or storage

Contribute to the Kubernetes documentation by submitting pull requests to the appropriate GitHub repository

By getting involved in the Kubernetes community, we can not only contribute to the project but also learn from other contributors and gain valuable experience in open-source development.
